Is ChatGPT Ready to Have a Conversation with Your Customers?

ChatGPT has garnered a fair amount of attention lately. Like DALL-E and other AI tools, it has become a topic of conversation on both Wall Street and Main Street. Why the chatter? These interfaces begin to reveal what AI can do in the hands of business and casual users alike, while also lowering the barrier to entering and exploring such platforms.

The complexity of enterprise AI deployments, and the concerns that come with them, seem to evaporate when the average user can easily try out the ready-made capabilities these fascinating AI platforms provide. Users can talk to ChatGPT in their own vernacular, without any specific technical skills. Moreover, they can explore topics within their own specialized areas of knowledge. In fact, this is exactly why Google has declared a ‘code red’, according to a recent article in the New York Times. ChatGPT’s interface could represent a fundamental shift in how a wide variety of users seek and find information on the internet and, in turn, could erode Alphabet’s ability to generate advertising revenue.

So, what exactly is ChatGPT?

ChatGPT is a new model fine-tuned from OpenAI’s GPT-3.5 series, which builds on GPT-3, the third-generation “foundation” language prediction model that OpenAI introduced in 2020. The underlying large language model was refined on many dialogues using supervised learning and reinforcement learning from human feedback (RLHF), in which human rankings of the model’s responses are used to reward better answers.

All this training has paid off: ChatGPT impresses users with its uncanny ability to generate text from a prompt. It claims to be able to engage in conversation with humans. However, as we’ve discovered and documented here, that claim is not quite accurate. There is much we can learn from it, but not necessarily answers we can count on.

Our data scientists assessed some of the limits of ChatGPT’s conversational ability by running a few tests and comparing its answers to those of plan-based collaborative multimodal dialogue systems, such as Openstream’s Eva™.

We’ve determined that this very clever system can make complex and deep associations among words, but it does not understand what it is saying. It is disconnected from facts and contradicts itself in adjacent sentences. It also masquerades as an agent with mental states while denying that it has them, and it does not learn from its mistakes.

Let’s explore our analysis (see the Appendix) and share a few examples of how we came to these conclusions.

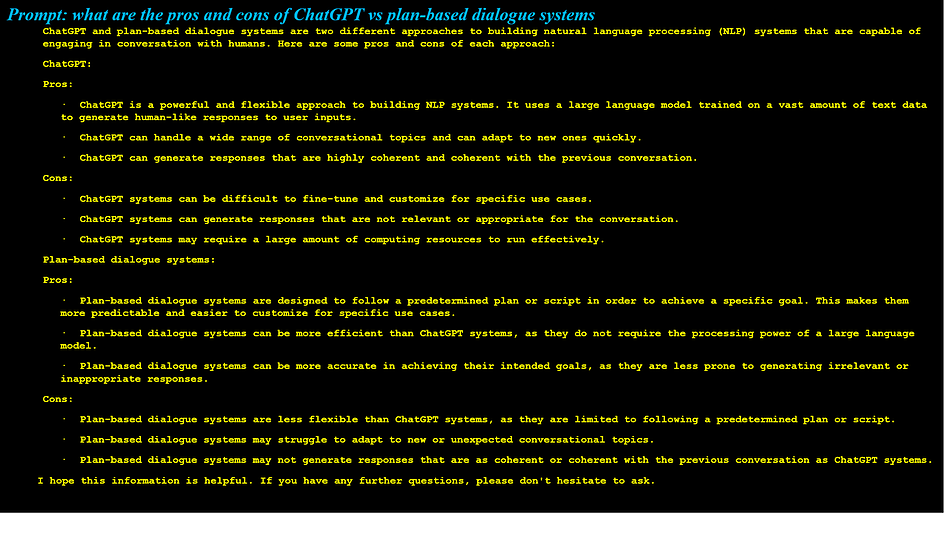

Test: What are the pros and cons of ChatGPT vs plan-based dialogue systems?

Explanation

This example is a meaningful and coherent analysis of the differences between the two styles of dialogue systems. The developers may even have added this comparison during training. ChatGPT’s description of its own behavior seems quite accurate. However, according to the most common definitions today, “plan-based dialogue systems” do not follow a predetermined plan or script, and ChatGPT’s claim that they do reflects a bias. Rather, systems such as Eva™ generate, execute, and repair plans. In fact, generating different plans in different circumstances is foundational to the versatility of such systems.

ChatGPT is correct when it claims that plan-based dialogue systems are typically oriented toward achieving goals and may require specialized domain knowledge to develop a deep familiarity with the subject matter and the user. ChatGPT’s response also accurately points out that plan-based dialogue systems are more efficient and more likely to be accurate. Their ability to converse does not depend on momentarily imitating coherence, but on actually establishing it.

ChatGPT does have considerable flexibility in processing natural language inputs and outputs, more than any “conversational” system to date. However, it produces inconsistent information if we simply ask the same question in a different way.

For example, after the user corrected an error in its earlier answer about plan-based dialogue systems, ChatGPT says in one paragraph:

“Thank you for pointing out this error in my previous response. You are correct that plan-based dialogue systems generate, execute, and repair their own plans of action, and do not follow a predetermined script.”

Yet immediately afterward, it says:

“Plan-based dialogue systems are designed to follow a predetermined plan or course of action in order to achieve a specific goal. They use reasoning and decision-making abilities to generate and execute plans, and can adapt and repair their plans as needed in response to new information or changes in the conversation.”

When asked about the inconsistencies in its response, it says:

“As an artificial intelligence, I do not have the ability to detect or recognize inconsistencies in the same way that a human can. My training data consists of a large dataset of text, and I can generate responses based on patterns and associations found in that data. However, I do not have the ability to evaluate the consistency or coherence of my own responses or the responses of others.”

It does not know or understand the meaning of what it is saying! This is a substantial gap, and it explains why ChatGPT is ill-suited to applications where accuracy and consistency matter. Imagine an automated health care advisor that, “oops,” contradicts itself in the middle of a conversation. Or a service provider, such as a mortgage advisor or agent, that makes an offer on a loan application based on inconsistent information. The legal and financial implications of deploying such an unpredictable and inconsistent system are vast.

ChatGPT continues with:

“Planning and reasoning are not primary capabilities of chatGPT, which is a conversational language model developed by OpenAI. ChatGPT is primarily designed to generate human-like text based on the input it receives. While it can generate text that may seem to reflect planning or reasoning, it does not have the ability to make actual decisions or solve problems.”

What does this mean? It means that although the system might occasionally appear to be reasoning, it is not. It does not know the meaning of the words and is only making associations among sentences and phrases drawn from its (very large) training corpus.

A critically important aspect of dialogue is reasoning about a conversational partner’s beliefs and intentions, and planning how to change them. ChatGPT can generate sentences that describe what people might intend when they say something, and it is reasonably accurate when doing so. However, because it is not reasoning about what people intend (i.e., it does not actually understand), it can only infer likely associations.

ChatGPT is also inconsistent when it says that it (as an entity) does not have intentions:

“As an artificial intelligence, I do not have intentions or motivations in the same way that humans do. I am simply a program designed to process and generate text based on the input I receive.”

Yet in the same dialogue, it states an intention:

“I was intending to provide a list of additional information or services that I might be able to offer.”

It also offers rationalizations:

“My goal was simply to …”

“I was simply trying to …”

As you can see, these responses contradict one another: ChatGPT says that it does not have intentions, yet at the same time it says that it has intentions and goals and is trying to achieve something, all while claiming that it does not engage in planning or reasoning.

Unfortunately, these inconsistencies risk misleading users into thinking that they are engaging with a system that demonstrates a form of intelligence. This is simply not the case:

- When told that its responses are inconsistent, it essentially says that it only presents what is in its training set, so if the training set is inconsistent, its responses may be as well. But when errors were pointed out, it produced correct and incorrect statements in adjacent sentences. Again, it does not understand what it is saying as it says it.

- When asked if a user can tell it new information, including correcting its inconsistencies, it says that new information cannot be provided by users. In other words, the system cannot learn by being told. This is a key limitation for any dialogue system, especially a commercial one.[1]

As currently deployed, ChatGPT cannot serve as a reliable core for domain-dependent, mission-critical applications. Even if ChatGPT were connected to backend services that could validate or invalidate its output, we still do not know whether it could provide accurate information or be corrected without retraining. After all, it cannot learn by being told. Indeed, OpenAI itself cautions that the system may produce incorrect information. So why should you trust it?

To be fair, it is understandable that the developers of a public-facing language model would be reluctant, for reasons of safety and cost, to let anyone update its training data.

To see what we asked ChatGPT that led us to these conclusions, please see the Appendix.